ModAI

Online platforms seeking data labeling solutions face challenges balancing:

Human Data Labeling

Offshore teams are difficult to manage and deliver questionable quality, while costs grow in line with your user base.

Automation

Automation rarely reaches its full potential due to extreme complexity and a lack of ongoing technical support.

“We can’t spend millions of dollars on manual review. We need to focus on high risk content, not manually reviewing every single image.”

– Director of Trust & Safety

Marketplace, 10M Users

“Off the shelf classifiers are giving us bananas and saying they’re explicit. It costs us more to fine tune and hurts user experience.”

– Safety Team Lead

Marketplace, 10M Users

ModAI replaces manual data labeling with a new system that combines expert human judgment with the efficiency of powerful AI automation.

Cost Reduction

vs. existing BPO

Increase in Quality

from today's baseline

Under 1 week

to implement

Manual data labeling is inefficient and costly.

ModAI cuts costs by 25-50% with high quality and flexibility.

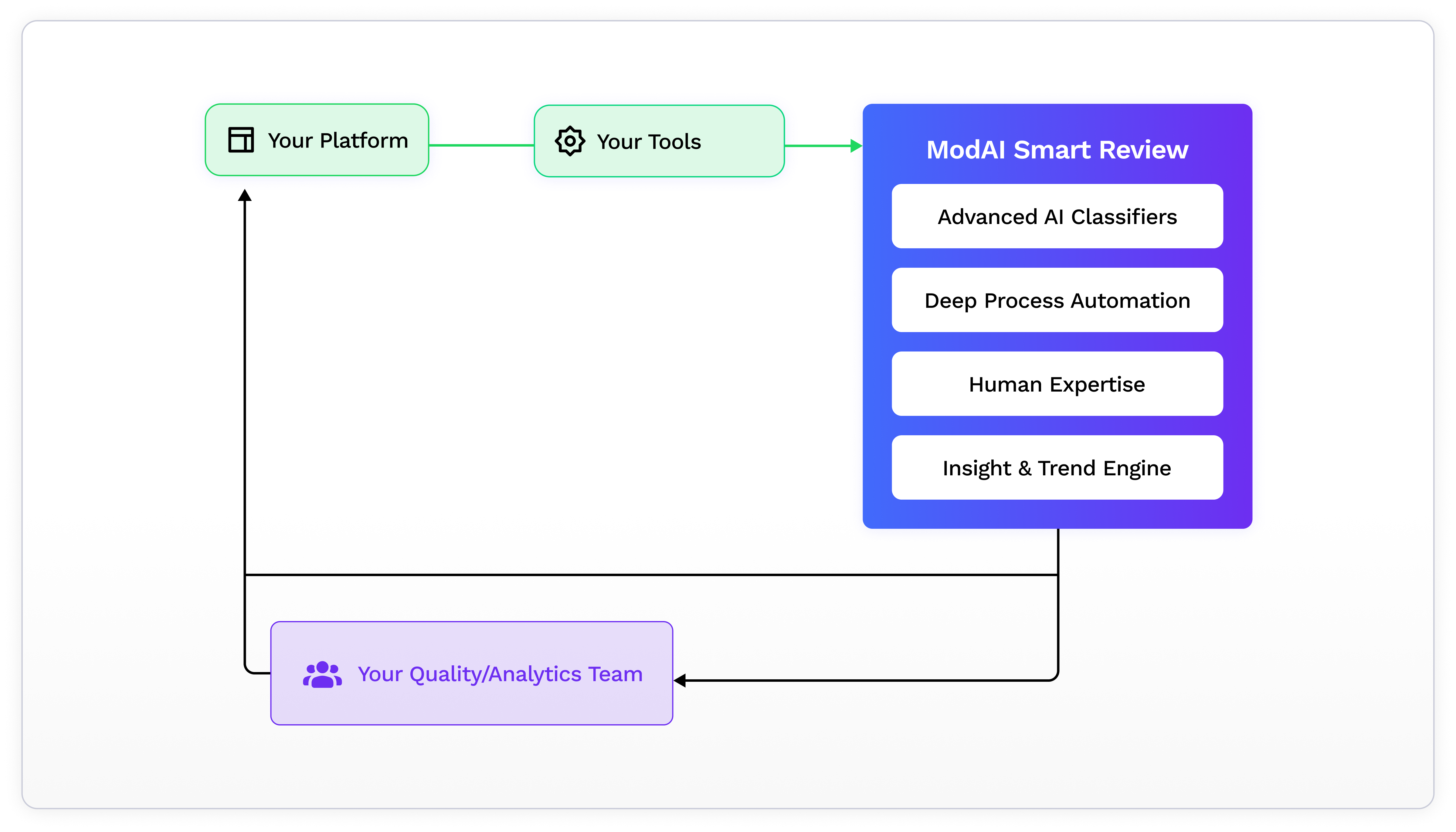

How ModAI works:

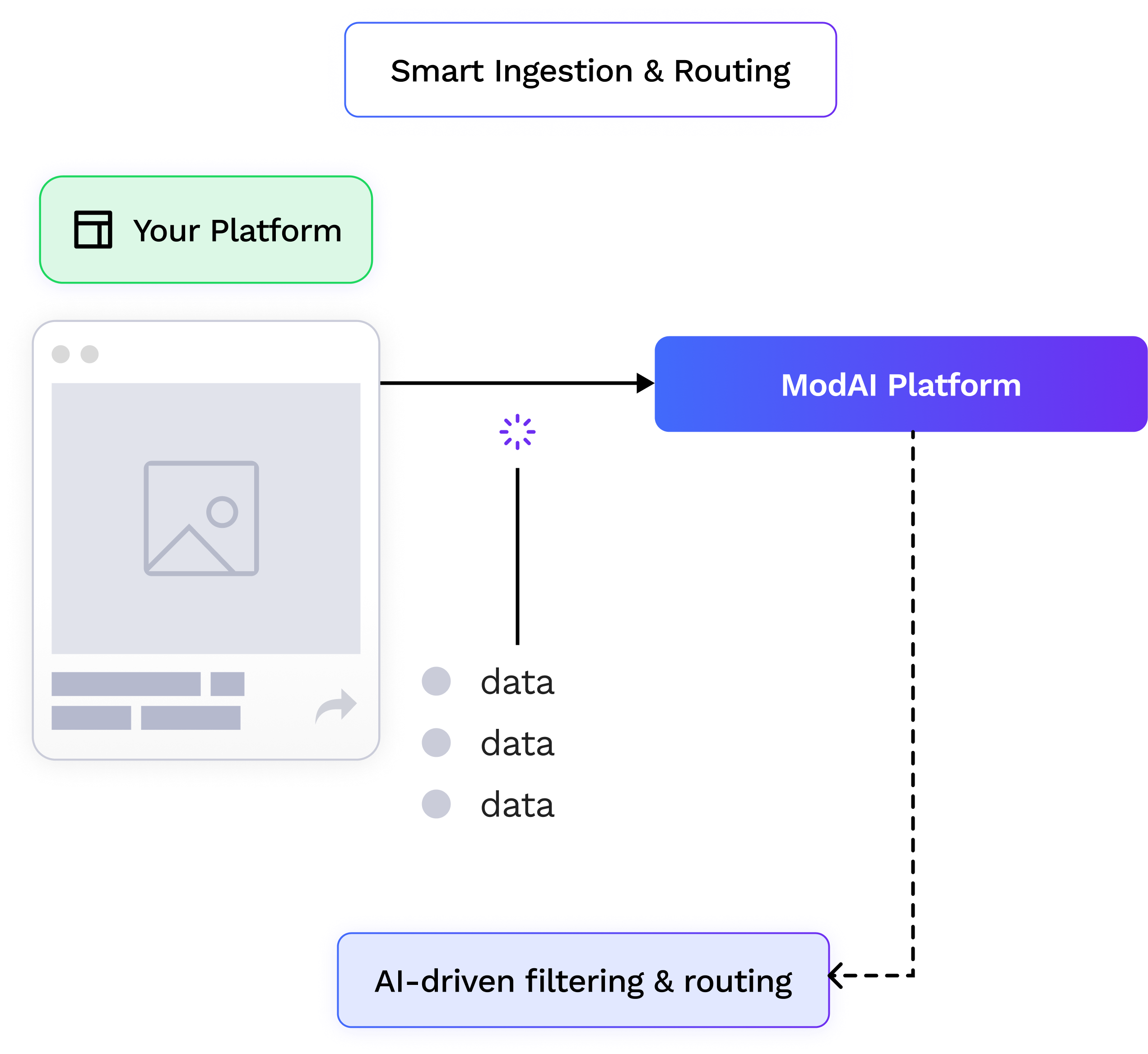

1. Smart Ingestion & Routing

ModAI ingests and routes your data through fine-tuned filters and classifiers. We automatically determine which items can be handled by LLM-as-a-Judge and which need expert human validation.
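As a rough illustration of the routing idea, here is a minimal sketch that sends high-confidence items to automated handling and everything else to human review. It is not ModAI's actual implementation: the `Item` fields, the `route` function, the queue names, and the 0.90 threshold are all assumptions made for this example.

```python
from dataclasses import dataclass

# Hypothetical cutoff for illustration only; not a value published by ModAI.
AUTO_CONFIDENCE_THRESHOLD = 0.90


@dataclass
class Item:
    item_id: str
    label: str         # label proposed by an upstream classifier
    confidence: float  # classifier confidence in [0, 1]


def route(item: Item, threshold: float = AUTO_CONFIDENCE_THRESHOLD) -> str:
    """Return the (assumed) queue name for an item: 'auto' or 'human_review'."""
    return "auto" if item.confidence >= threshold else "human_review"


items = [
    Item("img-1", "safe", 0.98),
    Item("img-2", "explicit", 0.55),
]

# Map each item ID to the queue it would be sent to.
routes = {item.item_id: route(item) for item in items}
print(routes)
```

In a setup like this, raising the threshold sends more items to human experts, while lowering it automates more of the volume.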

2. AI + HITL Calibration

ModAI pre-screens and classifies each piece of content, while human experts review edge cases, confirm policy violations, and reinforce quality through contextual judgment.

• Label quality matches that of your best human raters, ensuring consistent, accurate, and reliable data labels.

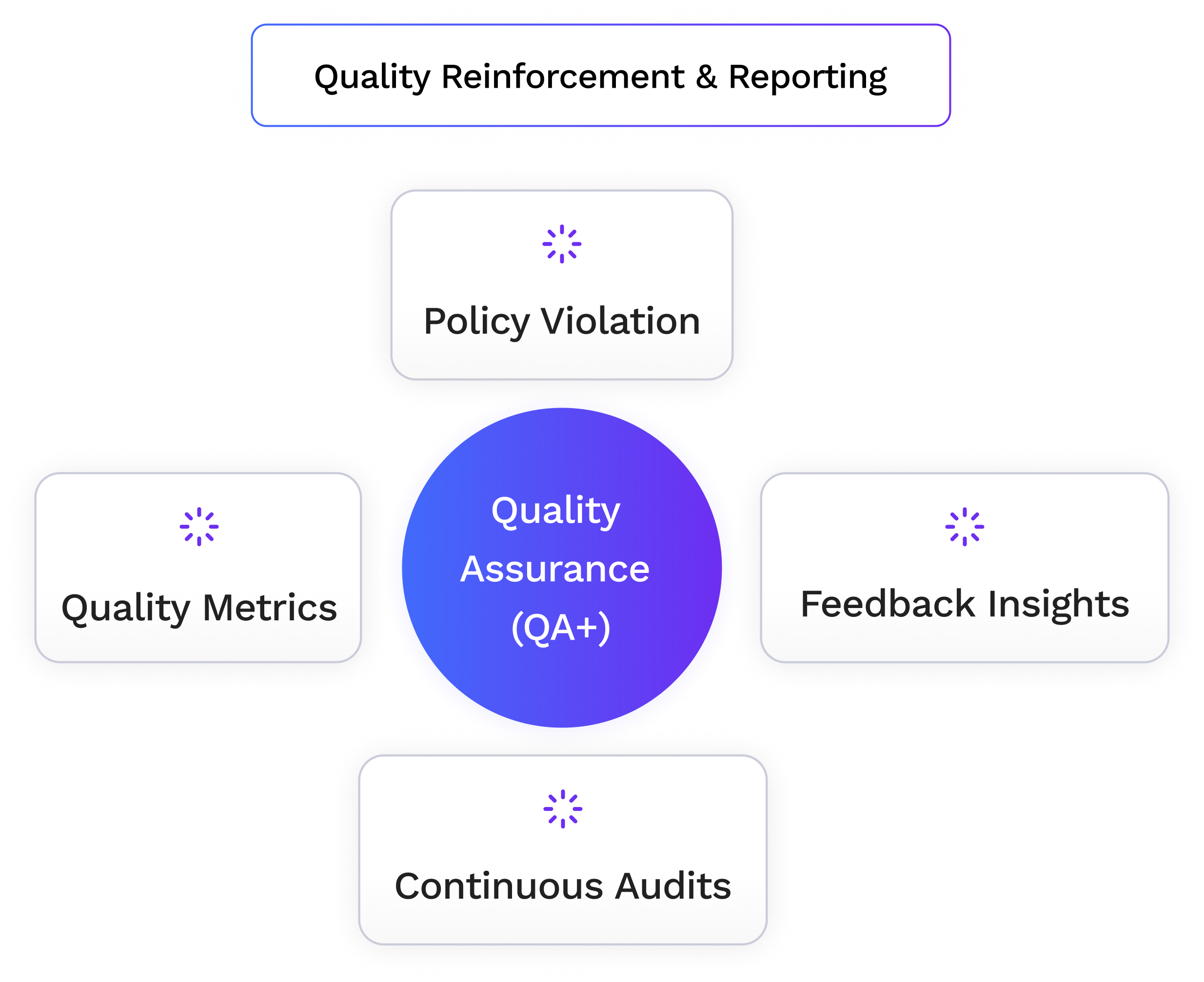

3. Quality Reinforcement & Reporting

Every AI and human decision feeds back into quality metrics and reports. Continuous auditing strengthens accuracy, transparency, and overall trust.

• You get real-time dashboards, audit trails, and data-science-grade metrics.
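To make the idea of audit-based quality metrics concrete, the sketch below compares AI labels against human audit labels for the same items. The function names, the `"violation"` label, and the sample data are assumptions for illustration; they do not describe ModAI's reporting API.

```python
def agreement_rate(ai_labels, human_labels):
    """Fraction of audited items where the AI label equals the human label."""
    if len(ai_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not ai_labels:
        return 0.0
    matches = sum(a == h for a, h in zip(ai_labels, human_labels))
    return matches / len(ai_labels)


def precision(ai_labels, human_labels, positive="violation"):
    """Of the items the AI flagged as `positive`, the share humans confirmed."""
    flagged = [(a, h) for a, h in zip(ai_labels, human_labels) if a == positive]
    if not flagged:
        return 0.0
    return sum(h == positive for _, h in flagged) / len(flagged)


# Made-up audit sample: four items labeled by the AI and re-checked by a human.
ai = ["violation", "safe", "violation", "safe"]
human = ["violation", "safe", "safe", "safe"]

print(agreement_rate(ai, human))  # 0.75
print(precision(ai, human))       # 0.5
```

Tracking numbers like these over time is one way an audit trail can show whether automated decisions stay in line with expert judgment.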

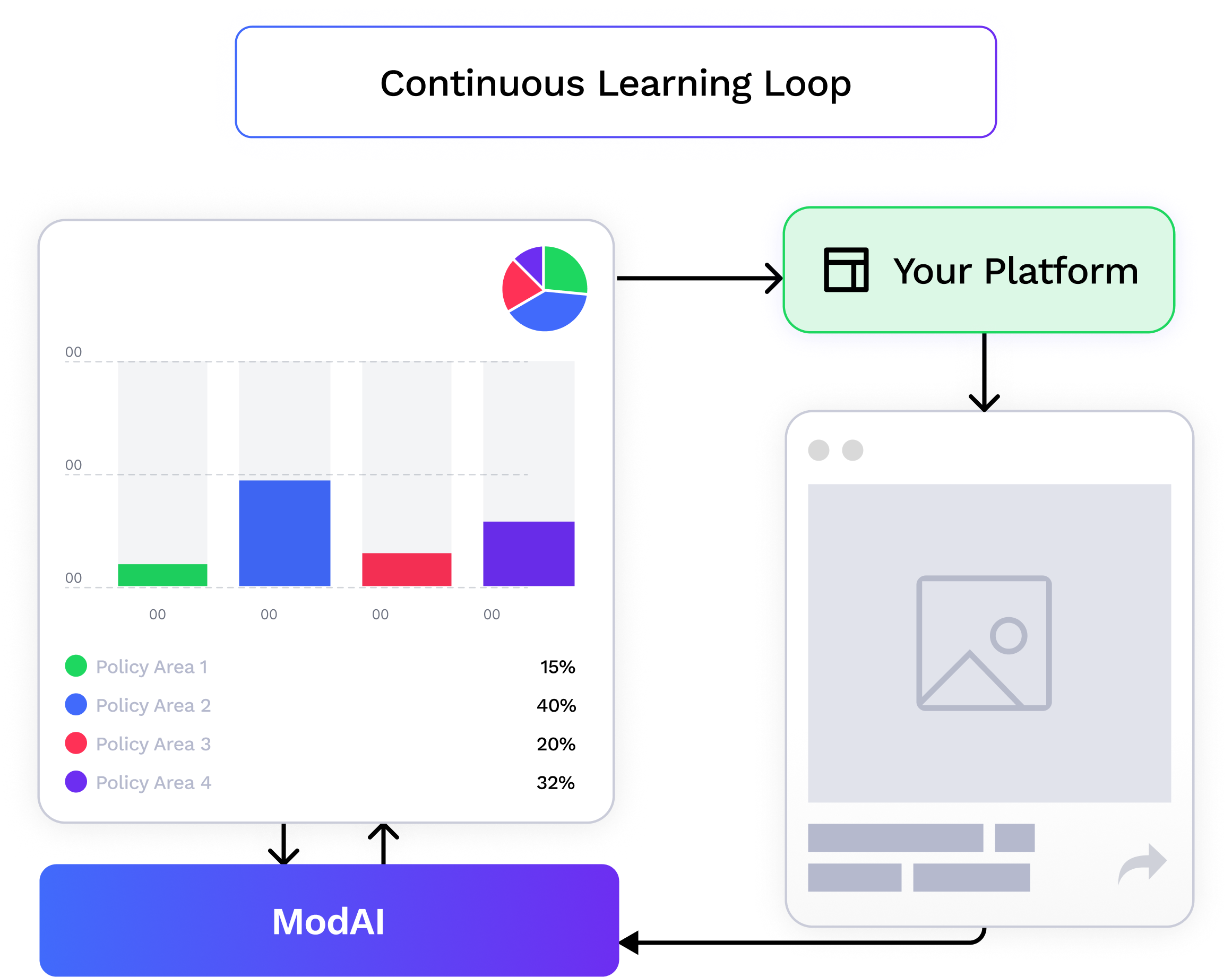

4. Continuous Learning Loop

Insights from audits and human feedback continuously train the AI models, improving precision, reducing manual workload, and enhancing performance over time.

• Launch in days, easily dial capacity up and down, and adjust policies on the fly.

We bring AI innovation and scale to data labeling workflows, so you can...

Get more done while paying less

We automate labeling and moderation tasks to reduce costs and quality loss, freeing up your internal team resources.

Maintain quality of best raters

Label quality matches that of your best human raters, ensuring consistent, accurate, and reliable content moderation and labeling.

Get insights on your platform & emerging threats

We deliver outstanding value to our clients and delight them with every interaction.

Effortless integration

While APIs provide the best performance and greatest flexibility, we can integrate with any internal or third-party moderation and labeling tool currently in use.

ModAI delivers high ROI by transforming labeling workflows.

40%+ savings with ModAI over 12-18 months