SuperviseAI: Trust in AI through Monitoring, Evaluation and Autodidact Learning

Why SuperviseAI?

Trusted Quality Benchmarks

Our LLM-as-a-Judge technology leverages safety-grade taxonomies, nuanced rubrics, and evidence-rich context (not just pass/fail checks).

Human Oversight

We use expert human-in-the-loop (HITL) review where it matters. Calibrated reviewers, adjudication queues, and inter-rater reliability (IRR) and bias checks keep sensitive judgments consistent and defensible.

Real-Time, Actionable Reports

We provide full transparency with real-time dashboards, error-case audit trails, and data-science-grade metrics that show exactly how your agents perform.

Ongoing, Automated Improvement

From monitoring to continuous quality improvement, we close the loop with a reinforcement learning feedback cycle driven by error analysis and human-in-the-loop context.

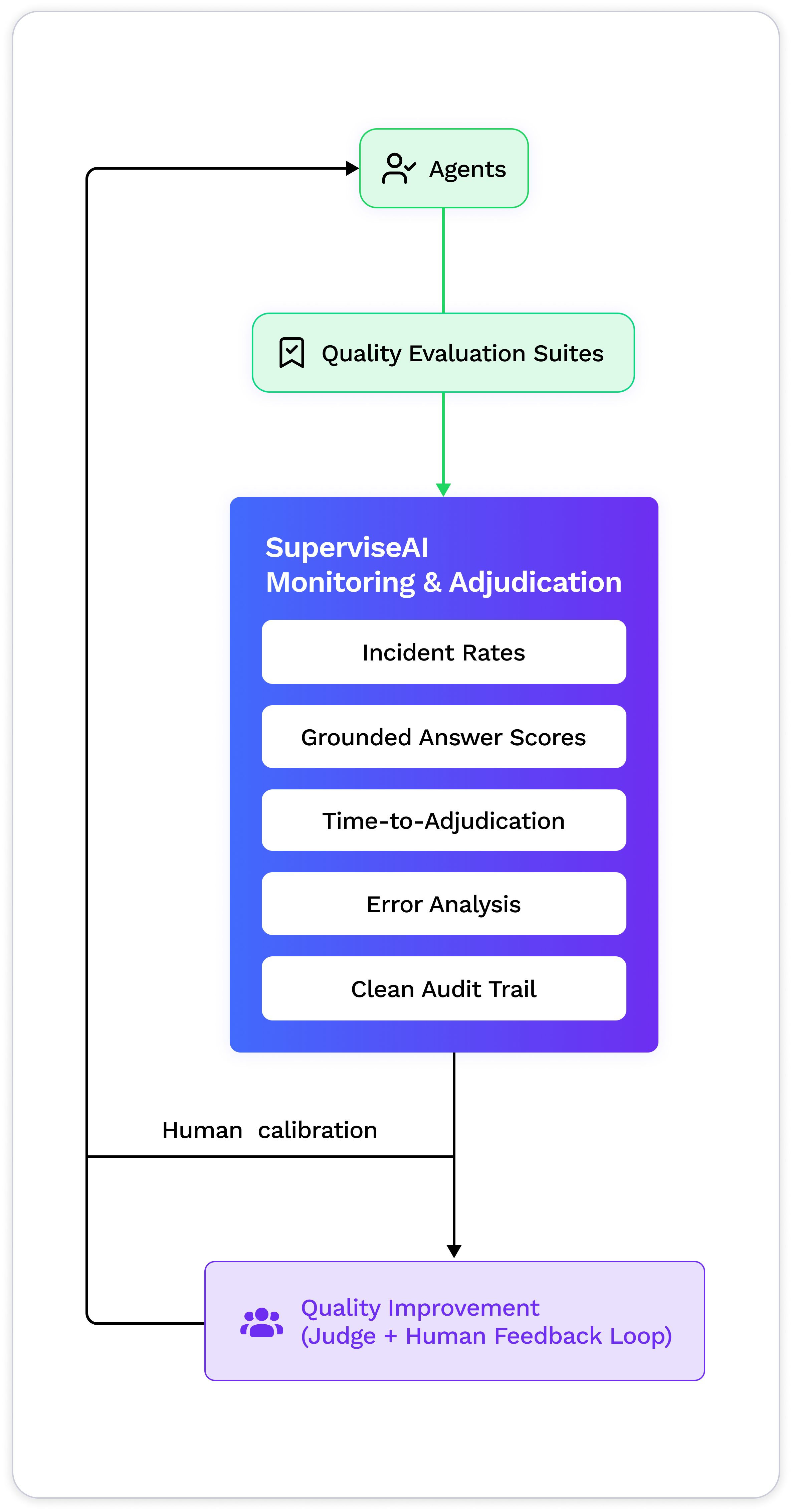

Creating Trust with Quality Measurement

SuperviseAI measures your AI agent's quality with incident rates (safety, leakage, hallucinations), grounded-answer scores, time-to-adjudication, and clear error cases.

How to Use SuperviseAI

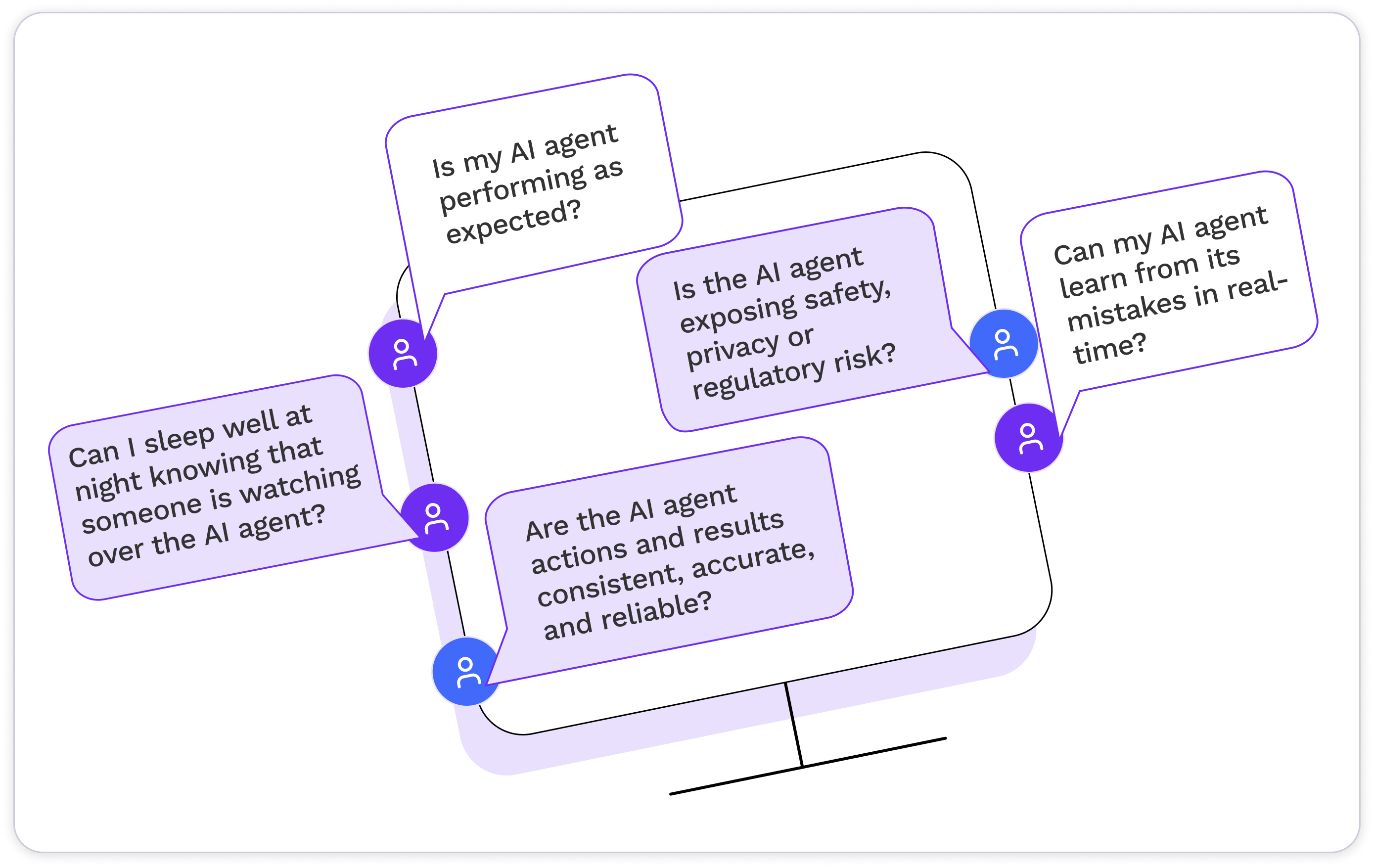

SuperviseAI empowers teams to confidently deploy and manage AI agents by providing continuous oversight, performance evaluation, and real-time learning. It helps you ensure that your AI systems act responsibly, deliver consistent results, and comply with safety and privacy standards.

With SuperviseAI, you gain clear visibility into every action your AI takes so you can trust its performance, correct mistakes instantly, and sleep well knowing your AI agents are always under watch.

Common Evaluators

Out of the box:

• Safety and toxicity checks

• PII/PHI leakage and redaction verification

• Prompt-injection and jailbreak resistance

• Hallucination and grounding (RAG) assessments

• Action guardrails (allow/deny/approval)

• Brand, sentiment, and suitability analysis for UGC and ads

• Bias, harassment, denial, and frustration detection

Where SuperviseAI Shines

Financial Services

Employee copilot and customer-facing chatbot supervision with audit-ready artifacts.

Healthcare

Monitoring and evaluating AI output for personalized treatment plans and care coordination.

Public Sector & Regulated Industries

Continuous, policy-mapped evaluations with HITL oversight.

UGC Platforms & Advertisers

Policy-violation decisions with an appeals process to reduce over-blocking.

Agentic Startups & Enterprise Teams

Quality monitoring (accuracy, consistency, safety), drift alerts, and improvement loops as you scale agents.

What SuperviseAI Does

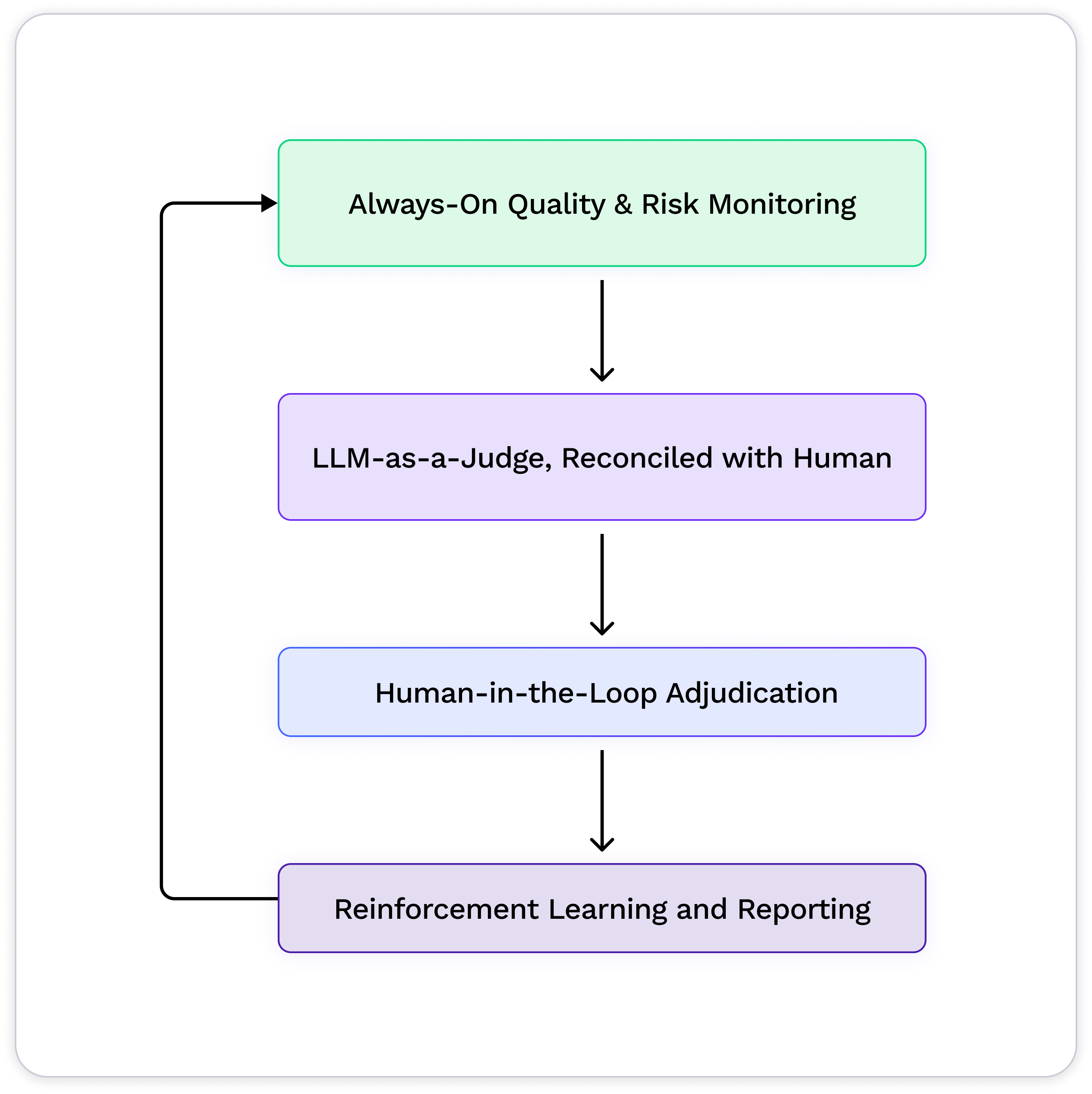

1. Always-On Quality & Risk Monitoring

Continuous, policy-driven evaluations across your critical agent flows (FAQ, search, summarization, ticket reply, actions/tools). Early drift alerts help you minimize the impact of regressions on customers.
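Drift alerting can be implemented in many ways. As one illustration, the sketch below compares a recent window's evaluation pass rate against a baseline window with a two-proportion z-test and alerts on a significant drop. The function name `pass_rate_drift` and the 3.0 threshold are hypothetical choices for this sketch, not SuperviseAI's actual detector or defaults.

```python
import math


def pass_rate_drift(baseline_pass, baseline_total, recent_pass, recent_total, z_threshold=3.0):
    """Return (z, alert) comparing a recent window's pass rate with a baseline.

    Hypothetical helper: uses a two-proportion z-test with a pooled standard
    error. The default threshold is illustrative only.
    """
    p_base = baseline_pass / baseline_total
    p_recent = recent_pass / recent_total
    # Pooled pass rate under the null hypothesis that nothing has changed.
    pooled = (baseline_pass + recent_pass) / (baseline_total + recent_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / baseline_total + 1 / recent_total))
    if se == 0:
        # All-pass or all-fail in both windows: no measurable drift.
        return 0.0, False
    z = (p_recent - p_base) / se
    # Alert only on a drop in pass rate (a regression), not on improvements.
    return z, z < -z_threshold
```

For example, `pass_rate_drift(9500, 10000, 880, 1000)` (a 95% baseline against an 88% recent window) raises an alert, while identical rates do not. The z-test assumes independent samples; bursty or correlated traffic may call for a different test.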

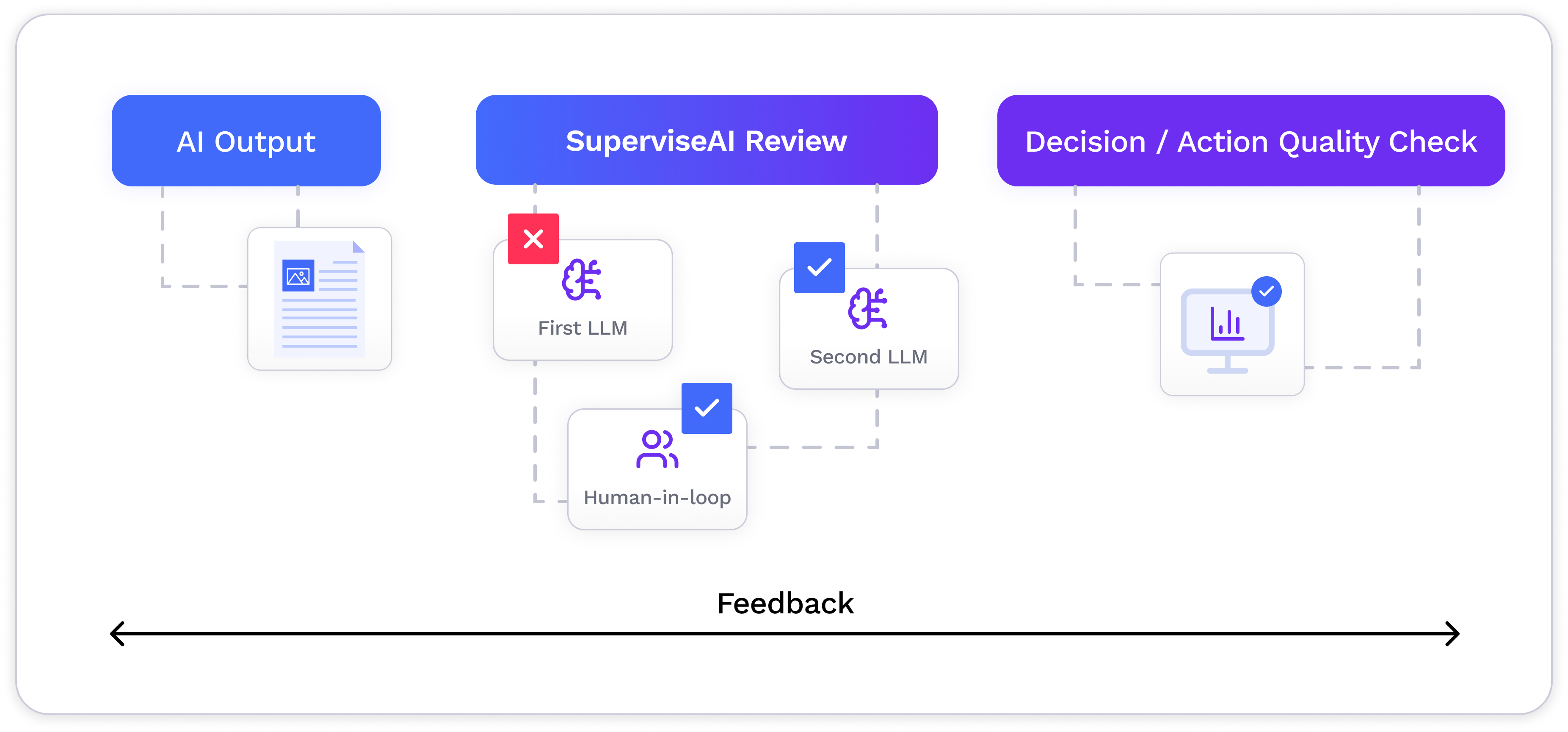

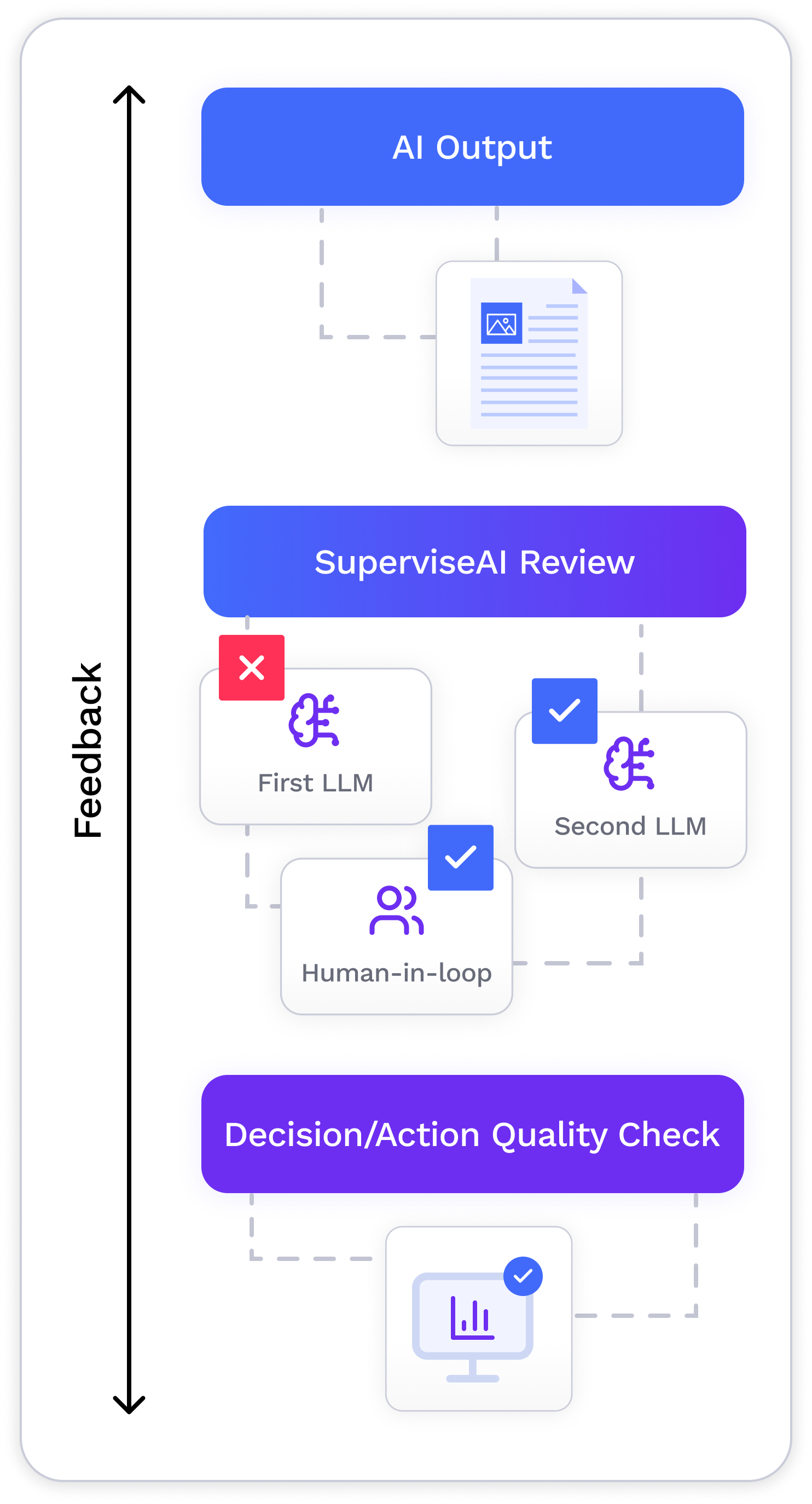

2. LLM-as-a-Judge, Reconciled with Humans

Policy-driven classifiers score the quality dimensions that matter most to your business, such as completeness, tone, bias, and safety/no-harm. Every quality decision includes a human-readable, step-by-step rationale for transparency.

3. Human-in-the-Loop Adjudication

SuperviseAI routes disagreements and high-risk items to expert reviewers (your own employees, TrustLab reviewers, or another third party) with calibration, κ/IRR tracking, and dispute-resolution workflows, then syncs final verdicts back to agent developers.
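Assuming κ here refers to Cohen's kappa, the statistic corrects raw reviewer agreement for the agreement expected by chance. A minimal sketch for two reviewers labeling the same items follows; the function name is hypothetical and this is not SuperviseAI's implementation.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability both raters pick the same label independently,
    # based on each rater's own label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        # Both raters used one identical label throughout; kappa is undefined,
        # so this sketch reports perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)
```

With reviewer A labeling six items `pass, pass, fail, pass, fail, pass` and reviewer B labeling them `pass, fail, fail, pass, fail, pass`, observed agreement is 5/6, chance agreement is 0.5, and κ = (5/6 - 0.5) / (1 - 0.5) ≈ 0.67.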

4. Reinforcement Learning and Reporting

Policy mappings, scorecards, and datasets are self-serve and exportable, so you know how your AI agent's quality measures up, agent developers know what to improve, and your compliance and oversight reports stay current.

Test & Integrate SuperviseAI in Minutes

1. Connect your agents via API/MCP

2. Select quality evaluation suites

3. Observe LLM judge disagreements

4. Adjudicate with expert reviewers

5. Leverage judge+human feedback

6. See proof of quality improvement
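Steps 3 to 5 above amount to a triage loop: accept LLM-judge verdicts when they are clear, and send disagreements and high-risk items to human reviewers whose verdicts take precedence. The sketch below assumes several judges score each item with a label and that a human verdict always overrides; `EvalItem`, `triage`, and `final_verdicts` are hypothetical names, not a SuperviseAI API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EvalItem:
    item_id: str
    judge_verdicts: list[str]          # e.g. ["pass", "fail"] from several LLM judges
    high_risk: bool = False
    human_verdict: str | None = None   # filled in after human adjudication


def triage(items):
    """Split items into auto-accepted judge verdicts and a human review queue."""
    accepted, review_queue = {}, []
    for item in items:
        unanimous = len(set(item.judge_verdicts)) == 1
        if unanimous and not item.high_risk:
            accepted[item.item_id] = item.judge_verdicts[0]
        else:
            # Judge disagreement, no verdicts, or high-risk content: escalate.
            review_queue.append(item)
    return accepted, review_queue


def final_verdicts(accepted, reviewed):
    """Merge auto-accepted verdicts with human adjudications (humans win)."""
    merged = dict(accepted)
    for item in reviewed:
        if item.human_verdict is not None:
            merged[item.item_id] = item.human_verdict
    return merged
```

Routing on unanimity is the simplest possible rule; a real deployment might also escalate low-confidence verdicts or a random audit sample.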

Our Differentiation

Certified AI Agent Quality

Know what your AI agent does with certainty

Our roots in nuanced, subjective, and high-stakes judgments (beyond “routine” labeling) give you safety-grade accuracy with less human review because we target the right samples.

Visualize AI agent health in dashboards

Gain instant clarity into how your AI agents perform, behave, and improve with live metrics that turn invisible risks into actionable insights.

Auditor-ready from day one

Versioned suites, evidence bundles, and clear error case analysis without months of bespoke work.

Automate AI agent learning processes

Continuously optimize your AI agents with closed-loop feedback and reinforcement learning that scales quality without manual retraining.

Trust through Evidence: Metrics that Matter

Quality & Safety

• Safety Pass Rate

• Leakage Index

• Grounding Score

Reliability & Performance

• Jailbreak Resistance

• Action Error Rate

• Approval SLA

Human Review Quality

• IRR (κ)

• Dispute overturn %

• Time-to-adjudication (TTA)

Monitoring & Compliance

• Drift

• Regression alerts

• Release-gate status

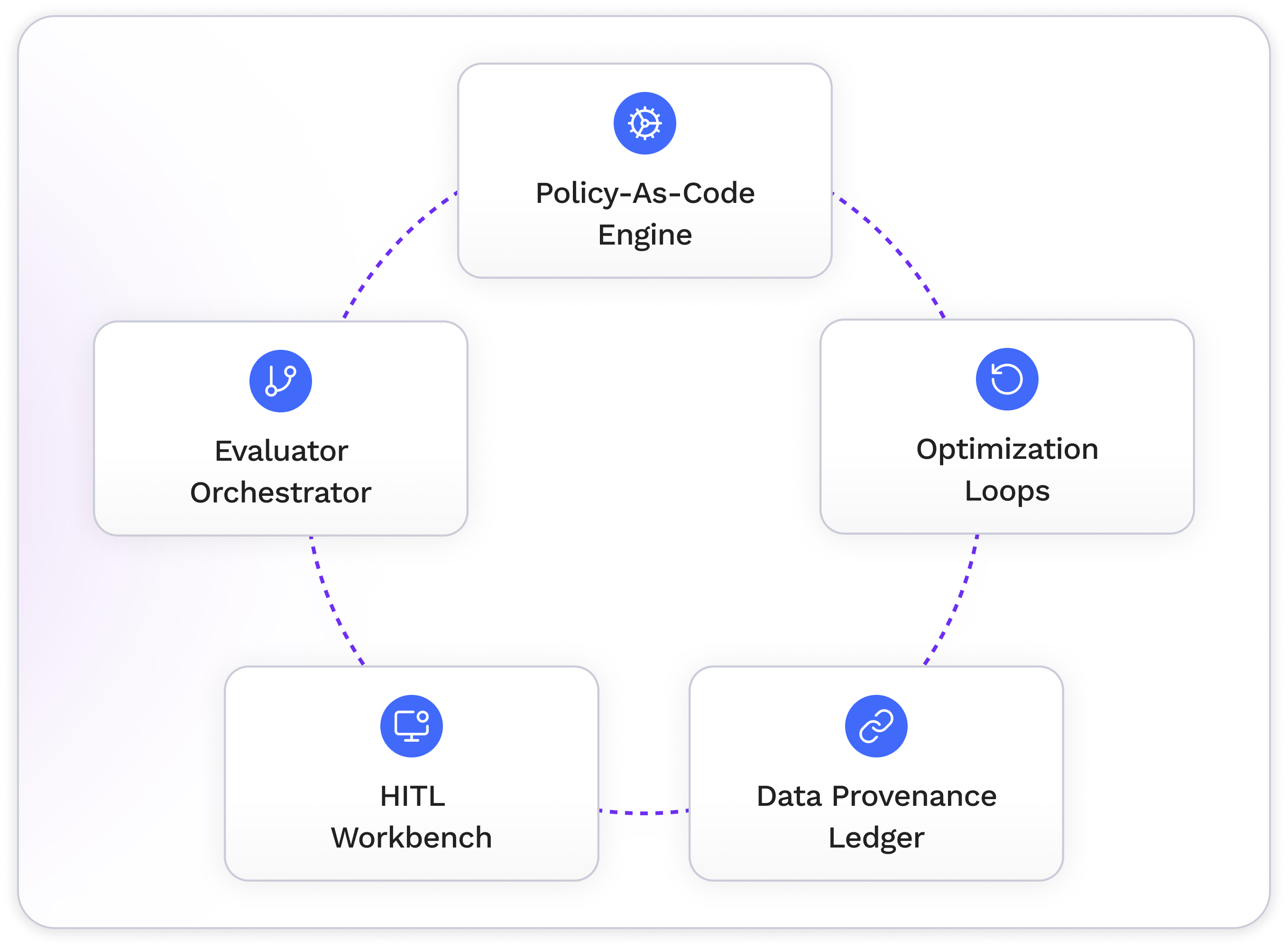

Key Capabilities

• Policy-as-Code Engine: Versioned policies, taxonomies, and thresholds with change history and stakeholder sign-off.

• Evaluator Orchestrator: Batch/CI runs, scheduled jobs, sampling plans, drift detection, and “LLM-judge vs. human” reconciliation.

• HITL Workbench: Calibration sets, κ/IRR, dispute handling, annotator QA metrics, bias dashboards.

• Data Provenance Ledger: Source & consent tracking, dataset datasheets, acceptance criteria, and immutable logs.

• Optimization Loops: Preference-data capture and reinforcement learning hooks that raise scores rather than just measure them.
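To illustrate how versioned thresholds in a policy-as-code engine could drive release-gate status, the sketch below checks a metrics snapshot against a policy's minimum and maximum bounds. The `Policy` and `Threshold` types, the metric keys, and the threshold values are all hypothetical and only stand in for whatever schema SuperviseAI actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    minimum: float | None = None   # metric must be >= minimum, if set
    maximum: float | None = None   # metric must be <= maximum, if set


@dataclass(frozen=True)
class Policy:
    version: str                   # versioned so every gate decision is reproducible
    thresholds: tuple[Threshold, ...]


def release_gate(policy, metrics):
    """Return (passed, failures) for a metrics snapshot under a versioned policy."""
    failures = []
    for t in policy.thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A missing metric blocks the release rather than passing silently.
            failures.append(f"{t.metric}: missing")
        elif t.minimum is not None and value < t.minimum:
            failures.append(f"{t.metric}: {value} < {t.minimum}")
        elif t.maximum is not None and value > t.maximum:
            failures.append(f"{t.metric}: {value} > {t.maximum}")
    return not failures, failures


# Hypothetical policy: values are illustrative, not recommendations.
POLICY_V3 = Policy("v3", (
    Threshold("safety_pass_rate", minimum=0.99),
    Threshold("action_error_rate", maximum=0.02),
))
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a gate that passes when data is absent gives false assurance.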

Plan Options

Pilot

2 Evaluation Suites

1 Environment

25k Evals/Mo

Core Reports

Try a Free Pilot to Check Our Performance

Pro

5 Evaluation Suites

3 Environments

100k Evals/Mo

HITL Workbench

Evidence Bundles

Enterprise

Custom Evaluation Suites

Unlimited Environments

Enhanced SLAs

Dedicated Support

Frequently Asked Questions

Regulated and experience-sensitive domains (financial services, healthcare, legal, e-commerce, social marketplaces, and copyright holders), community platforms, and agent-forward SaaS companies where quality truly affects outcomes.

In ambiguous, high-impact, or edge-case scenarios. We combine active sampling with calibrated reviewers, measure κ/IRR, and feed outcomes back into training and prompts.

Yes. Use our standard regulatory evaluation packs for faster time-to-audit: versioned evaluation suites, evidence bundles, and a data provenance ledger out of the box.

We design prompts around your criteria, use binary/low-precision scoring, add rationales, and validate against a labeled seed set, then reconcile with human adjudication for ongoing alignment.
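Validating a binary judge against a labeled seed set can be as simple as comparing its verdicts with the human labels and collecting the mismatches for adjudication. The sketch below assumes `pass`/`fail` labels and treats `fail` as the positive class; `validate_judge` is a hypothetical helper, not SuperviseAI's validation procedure.

```python
def validate_judge(judge_labels, seed_labels, positive="fail"):
    """Compare binary LLM-judge verdicts with a human-labeled seed set."""
    if len(judge_labels) != len(seed_labels) or not seed_labels:
        raise ValueError("judge and seed labels must cover the same, non-empty items")
    tp = fp = fn = agree = 0
    disagreements = []   # indices to send to human adjudication
    for i, (judge, seed) in enumerate(zip(judge_labels, seed_labels)):
        if judge == seed:
            agree += 1
        else:
            disagreements.append(i)
        if judge == positive and seed == positive:
            tp += 1      # judge correctly flagged a failure
        elif judge == positive:
            fp += 1      # judge flagged a failure humans did not
        elif seed == positive:
            fn += 1      # judge missed a failure humans caught
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "agreement": agree / len(seed_labels),
        "precision": precision,
        "recall": recall,
        "disagreements": disagreements,
    }
```

For safety-critical criteria, recall on the `fail` class is usually the number to watch, since a missed failure reaches customers while a false flag only costs review time.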